Information theory defines how much information a message contains, in terms of the probabilities with which the symbols that make it up are likely to occur. It ushered in the age of information. It established limits on the efficiency of communications, allowing engineers to stop looking for codes that were too effective to exist. It is basic to today's digital communications: phones, CDs, DVDs, the Internet. Information theory led to efficient error-detecting and error-correcting codes, used in everything from CDs to space probes. Its applications include statistics, artificial intelligence, cryptography, and extracting meaning from DNA sequences.
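
To make "how much information a message contains" concrete, here is a minimal sketch of Shannon entropy, H = -Σ p log₂ p, measured in bits per symbol. It assumes each symbol's probability can be estimated from its relative frequency in the message itself and that symbols are independent. The function name `shannon_entropy` is purely illustrative.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Average information per symbol, in bits (illustrative helper).

    Assumption: symbol probabilities are estimated from their relative
    frequencies in the message, and symbols are treated as independent.
    """
    if not message:
        return 0.0
    counts = Counter(message)
    total = len(message)
    # H = sum(p * log2(1/p)) over every distinct symbol,
    # which equals -sum(p * log2(p)) but avoids printing -0.0.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy("aaaa"))  # 0.0: a fully predictable message
    print(shannon_entropy("abab"))  # 1.0: two equally likely symbols
    print(shannon_entropy("abcd"))  # 2.0: four equally likely symbols
```

Under the same assumptions, multiplying H by the message length gives a lower bound on the number of bits any lossless symbol-by-symbol code built for these frequencies can use on average. This is the kind of limit on efficiency described above.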

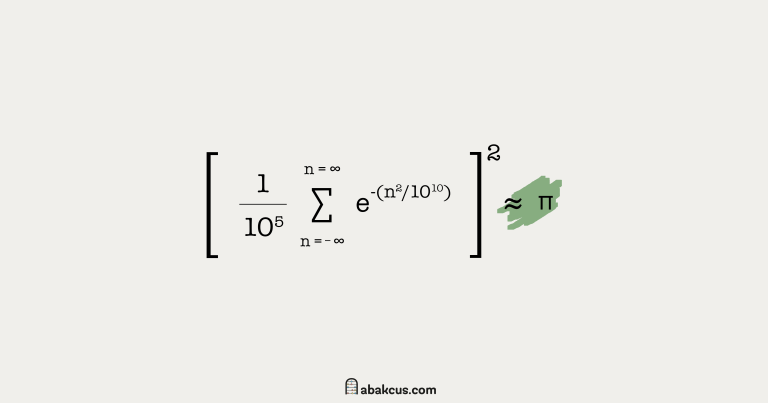

The Formula to Get 42 Billion Digits of π

While writing "7 Utterly Well-written Math Books About Pi," I found a very interesting formula that agrees with π to 42 billion consecutive digits and yet is not equal to π.